I am trying to understand when I should be using attributes or dimensions for storing 1-D arrays in time.

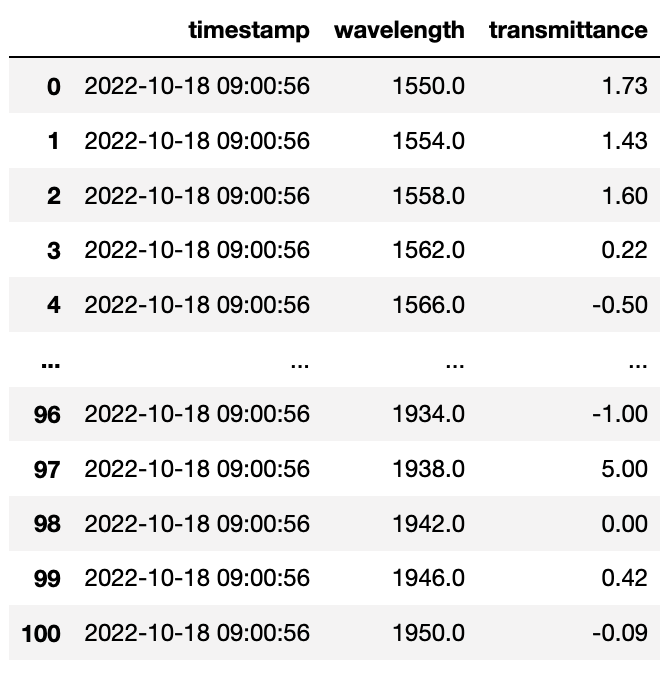

I have a 1-D array with n elements representing an IR spectra. At different points in time (at the second level) I will take a new scan with an IR sensor and get transmittance values at different wavelengths, and I want to add new scans as rows to a tileDB store. I am using SparseArrays since the sampling rate is sparse, but I know the start and end dates of my trial period.

I got the following to work (i.e: one dimension for time and 100 attributes for each wavelength):

# Create the one dimension

d1 = tiledb.Dim(name="timestamp",

domain=(strt_dt, end_dt),

tile=1000,

dtype="datetime64[s]")

# Create a domain using the two dimensions

dom1 = tiledb.Domain(d1)

# Create attributes for each wavelength attribute (wv is an array of the wavelengths I sampled at)

attrs = [tiledb.Attr(name=str(i), dtype=np.float64) for i in wv]

# URI

array_name = "/path/IRspectra"

# Create the array schema, setting `sparse=True` to indicate a sparse array

schema1 = tiledb.ArraySchema(domain=dom1,

sparse=True,

attrs=attrs)

# Create the array on disk (it will initially be empty)

tiledb.Array.create(array_name, schema1)

# Write some data:

data = dict(zip(wv.astype(str),spectra))

with tiledb.open(array_name,'w') as A:

# Write the data:

A[strt_dt] = data

But it seems to me that defining two dimensions; one for timestamp and the other for wavelength would make more intuitive sense. The code might change as follows:

# Create the two dimensions

d1 = tiledb.Dim(name="timestamp",

domain=(strt_dt, end_dt),

tile=1000,

dtype="datetime64[s]")

d2 = tiledb.Dim(name="wavelength",

domain=(0,99),

dtype=int)

# Create a domain using the two dimensions

dom = tiledb.Domain(d1,d2)

# Create attributes for each wavelength attribute (wv is an array of the wavelengths I sampled at)

attr = tiledb.Attr(name="transmittance", dtype=np.float64)

# URI

array_name = "/path/IRspectra"

# Create the array schema, setting `sparse=True` to indicate a sparse array

schema1 = tiledb.ArraySchema(domain=dom,

sparse=True,

attrs=[attr])

# Create the array on disk (it will initially be empty)

tiledb.Array.create(array_name, schema1)

# Write some data:

with tiledb.open(array_name,'w') as A:

# Write the data:

A[strt_dt, wv] = {"transmittance": spectra} # spectra is an array of transmittance values

but I get something like ValueError: value length (100) does not match coordinate length (1)

(admittedly I rewrote the code from memory as I erased it so not sure if that’s the exact error).

questions are: what did I do wrong in the second case? How should I structure this kind of array so that it best fits my use case?

EDIT:

error is IndexError: sparse index dimension length mismatch